Save-My-World

This is the last post of my 3-part series for Save My World. If you haven’t yet read Part 1 and Part 2, do check them out.

In Part 1, I give an overview of the application.

In Part 2, I provide a more in-depth explanation of the entire development process: Problem Sourcing, Design Thinking and Prototyping, Rapid Prototyping with CI/CD, and everything else.

In Part 3, I talk about the bottlenecks of the project and the workarounds that we used to resolve them.

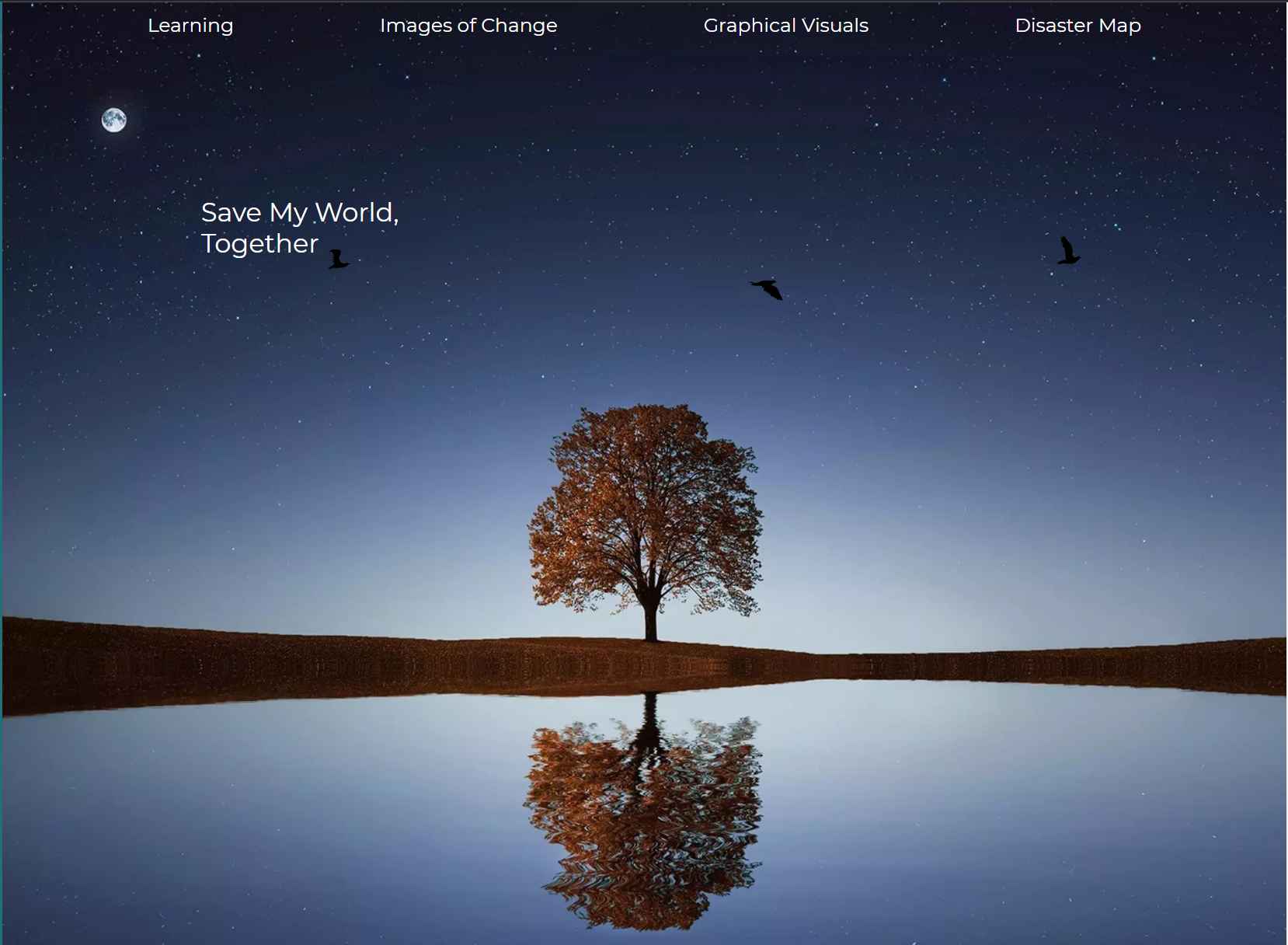

Save My World’s Landing Page

Problems and Bottlenecks Overview

During the entire development lifecycle, we observed a few bottlenecks that hindered our progress. Some were easily solvable, while others took a long time. In this post, I’ll go through the 3 key technical problems of our application and how we resolved them. I’ll also go through a killer bug which we didn’t manage to solve due to a lack of time. Here is the list:

Problems Resolved

- Vue2 vs Vue3 compatibility for many libraries

- HTTPS to HTTP API Connectivity Error on Deployment

- Geolocation input to APIs that needed both the Lat and Long

Bugs that became Features

- Getting throttled by the ReliefWeb API

Vue2 vs Vue3 Compatibility

Vue 3 was officially released not too long ago, in late 2020, and many libraries still only support Vue 2. This becomes an issue when we use those libraries, as we have to consider how they integrate with the rest of the app. With Vue 3 came the Composition API. You can think of the Composition API as a new way to write Vue code. It reminds me of the “React Way”, as the Composition API uses reusable code and hooks, just like how React does it. You can read more about it here.

Our course barely taught Vue, and didn’t touch on the Composition API at all. However, because some libraries had ported over to Vue 3, such as Vue-ChartJS, we had to pick it up. To overcome this barrier when switching, we allowed our architecture to take on both forms at the same time. As the Options API is still supported in Vue 3, we integrated them both together. For major components that had to have their reactivity controlled using the Composition API, we stuck purely to that. Some examples were the Chart.js components and the Image Slide component. I’ve attached a code snippet below so you can see what we did.

    import { ref } from "vue";
    // Vue3ChartJs comes from the chart wrapper library (import not shown here).

    export default {
      name: "App",
      components: {
        Vue3ChartJs,
      },
      setup() {
        // Template ref to the chart component, used to trigger a redraw.
        const chartRef = ref(null);
        const country = ref("Singapore");
        const year = ref(2020);
        const isLoading = ref(false);

        // Start year -> end year of each 20-year period.
        const chartYears = {
          past: {
            1920: 1939,
            1940: 1959,
            1960: 1979,
            1980: 1999,
          },
          future: {
            2020: 2039,
            2040: 2059,
            2060: 2079,
            2080: 2099,
          },
        };

        ...

        // Replace the chart's title, labels and datasets, then redraw it.
        const updateChart = () => {
          lineChart.options.plugins.title = {
            text: "Updated no Chart",
            display: true,
          };
          lineChart.data.labels = ["Cats", "Dogs", "Hamsters", "Dragons"];
          lineChart.data.datasets = [
            {
              label: "Temperature Over Time",
              backgroundColor: ["#41B883"],
              borderColor: "#41B883",
              data: [65, 59, 80, 81, 56, 55, 40],
              fill: false,
            },
          ];
          chartRef.value.update(null);
        };

Using the Composition API

Luckily, this problem was not too hard to solve. It just required some extra late-night coffee to get Vue’s reactivity system to update.

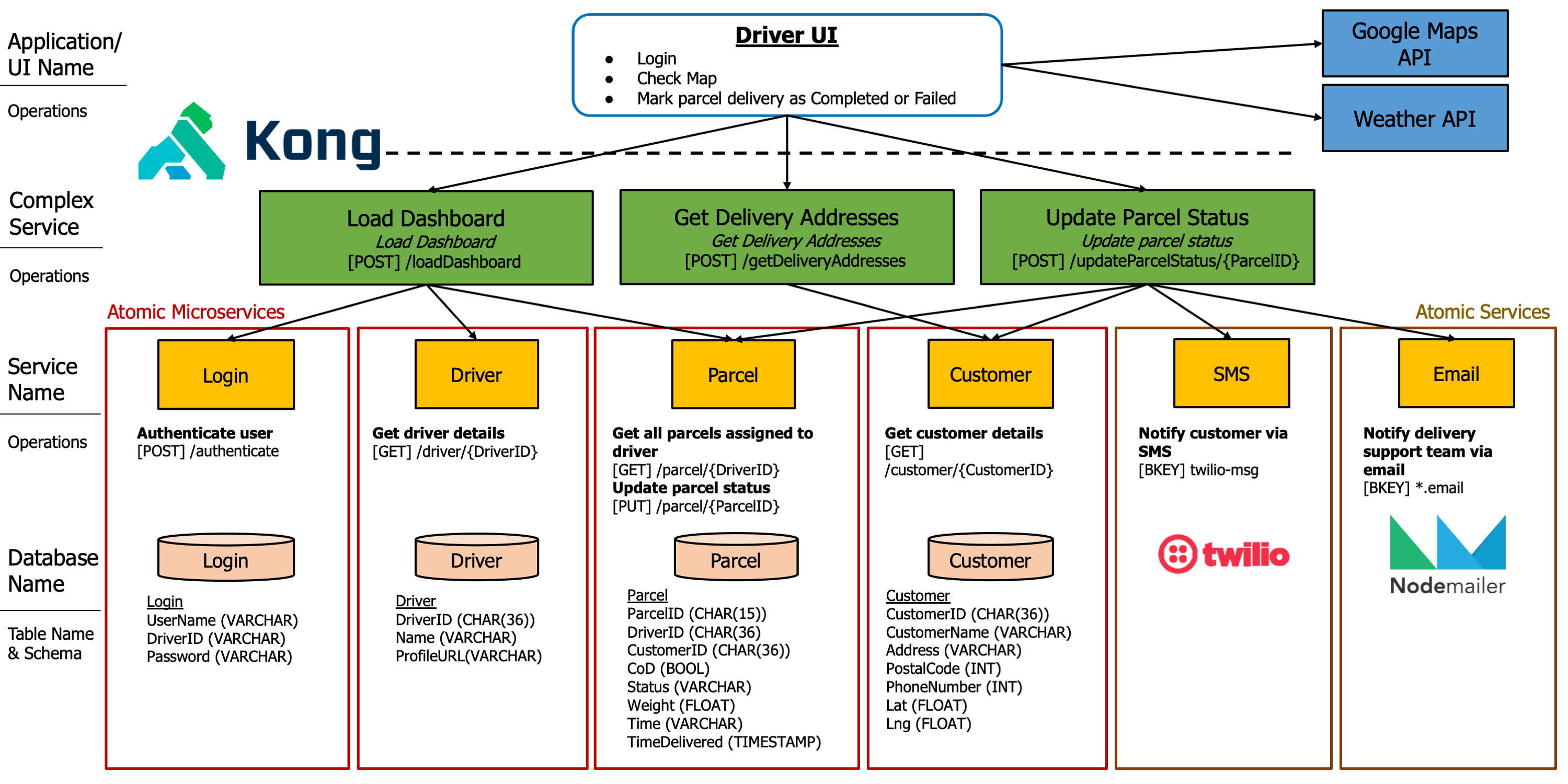

HTTPS to HTTP API Connectivity Error

Luckily, I knew that anything deployed would behave differently from what we see on localhost. I learnt it the hard way back in 2020 when I was developing sites optimized for multiple browsers. With the CI/CD pipeline set up, we were able to do continuous testing and we sieved out a key bug.

The World Bank API can only be accessed via HTTP. However, the deployed app runs on HTTPS, and the request fails: HTTPS traffic is encrypted while HTTP is not, so the browser refuses to let a secure page call an insecure endpoint. We had to either:

- Find a new API, or

- Find a workaround

I opted for the workaround because the World Bank API provides really good information, and I was interested in figuring out how to work around this problem. After much digging and asking friends, I decided to set up a serverless Lambda function, which makes the request for us. This bypasses the HTTPS error, but introduces a new CORS error.

For those unfamiliar with CORS, you can read more about it here. Essentially, it means that the browser stops Save My World from reading the response of the Lambda function, because the function is “Cross-Origin” (served from a different domain) and does not explicitly allow it. CORS is an issue that can only be resolved (at least that’s how I resolve it) on the ‘backend’/server.

The Serverless Compute kills 2 birds with 1 stone. However, setting it up wasn’t easy.

Something like what we used, without DynamoDB

Photo Credits: Jworks Tech Blog.

To bypass the CORS error, I had to set up a two-pronged Lambda approach. Let’s call them (Lambda) Functions A and B.

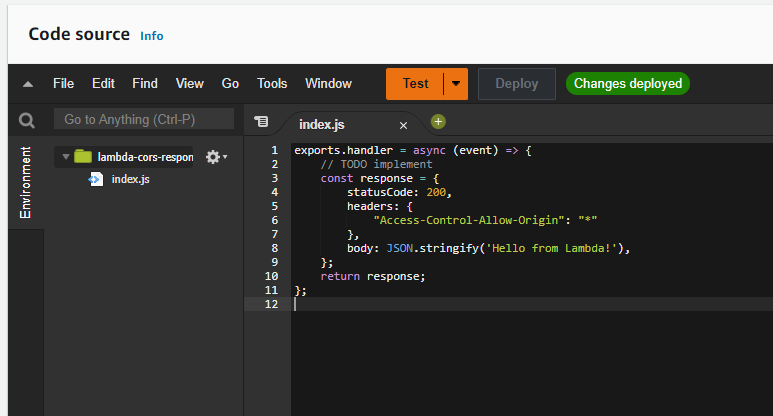

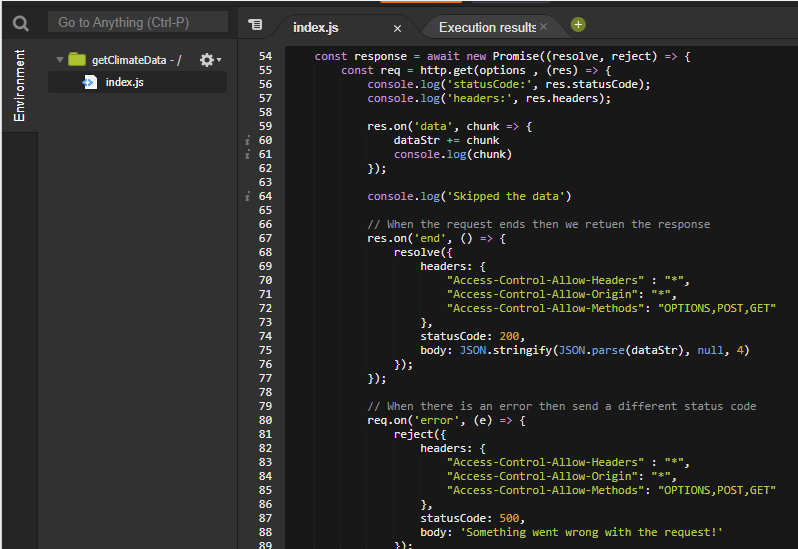

- Save My World makes a request to /endpoint. Because the browser first sends an OPTIONS pre-flight request, I route that request to Function A.

- Function A’s job is to return an “Access-Control-Allow-Origin”: “*” header in the response.

- Save My World receives the OPTIONS pre-flight response and there is no more CORS error.

- Save My World then makes a POST request to Function B, which returns the necessary information.

Preflight Request is just this. Simple!

Main request uses Promises, but still pretty simple nonetheless. Nothing fancy
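
To make the flow above concrete, here is a minimal sketch of what the two functions could look like in Node.js. It is not the exact code we deployed: the `{ statusCode, headers, body }` return shape assumes an API Gateway proxy-style integration, the names `createProxyHandler`, `baseUrl`, and `fetchText` are made up for this example, and the default `fetchText` assumes a runtime with a global `fetch` (Node.js 18 or later). The sketch also attaches the CORS headers to Function B's response, since browsers check the actual response as well as the pre-flight one.

```javascript
// CORS headers the browser looks for before allowing the cross-origin call.
const CORS_HEADERS = {
  "Access-Control-Allow-Origin": "*",
  "Access-Control-Allow-Methods": "OPTIONS,POST",
  "Access-Control-Allow-Headers": "Content-Type",
};

// Function A: answers the OPTIONS pre-flight request with the CORS headers only.
const preflightHandler = async () => ({
  statusCode: 200,
  headers: CORS_HEADERS,
  body: "",
});

// Function B: makes the plain-HTTP request on the server side and relays the body.
// `baseUrl` and `fetchText` are hypothetical parameters for this sketch.
const createProxyHandler = ({
  baseUrl,
  fetchText = async (url) => (await fetch(url)).text(),
}) => async (event) => {
  try {
    const { path } = JSON.parse(event.body || "{}");
    if (typeof path !== "string" || !path.startsWith("/")) {
      return {
        statusCode: 400,
        headers: CORS_HEADERS,
        body: JSON.stringify({ error: "Expected a JSON body with a path" }),
      };
    }
    const body = await fetchText(baseUrl + path);
    return { statusCode: 200, headers: CORS_HEADERS, body };
  } catch (err) {
    // Upstream failure or malformed JSON: report it without crashing the function.
    return {
      statusCode: 502,
      headers: CORS_HEADERS,
      body: JSON.stringify({ error: String(err) }),
    };
  }
};
```

In a real deployment each function would be exported as the handler of its own Lambda, with the OPTIONS route pointing at `preflightHandler` and the POST route at the handler returned by `createProxyHandler`.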

Doing the above gave me new in-depth exposure to AWS, which is pretty nice for self-learning. It was tough setting it up and the documentation isn’t really well written. I had to search around the web for quite a bit, but managed to make it work in the end.

Geolocation Input

The last key problem we solved was the use of a Reverse Geolocation API. Many of the open-source APIs require a Lat and Long input, and return data that is scoped to that specific area. This was slightly tricky at the start because (1) my team and I were not super familiar with axios request chaining, and (2) countryName isn’t a good parameter because there are different locations in the world with similar names.

To mitigate this issue, I adopted a reverse Geolocation API approach. As I had done some form of web scraping before, I knew that we could ‘store’ the Lat and Long details in Firebase. Then, we could set up a simple API which lets us get the Geolocation details using an input. I set it up and chose to go with the ISO3 input for the country because it is widely known, and some of the open-source APIs that we were using also required it.

A problem that arose was that many Geolocation APIs were very costly, and free API calls were limited. I implemented both: a Firebase version where we pre-store the geolocation details, and the LocationIQ API. I chanced upon this API and found that it had quite a generous monthly limit, and was easy to use and integrate. I also imported a library which could convert country names to other identifiers (ISO3, etc.). All in all, this was an easy problem to solve.
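
The lookup-then-request chain described above can be sketched roughly like this. It is only an illustration under assumptions: `STORED_COORDINATES` stands in for the pre-stored Firebase data (the single entry is an illustrative value), and `geocode` and `fetchByCoordinates` are hypothetical functions standing in for the external geocoding call and for whichever data API needs the Lat and Long.

```javascript
// Stand-in for the pre-stored data, keyed by ISO3 code (illustrative value only).
const STORED_COORDINATES = {
  SGP: { lat: 1.3521, lon: 103.8198 },
};

// Resolve an ISO3 code to coordinates: stored data first, external geocoder as fallback.
const resolveCoordinates = async (iso3, geocode) => {
  const key = iso3.toUpperCase();
  const stored = STORED_COORDINATES[key];
  if (stored) return stored;
  return geocode(key);
};

// Chain the two requests: first get the coordinates, then ask the data API for that area.
const getDataForCountry = async (iso3, { geocode, fetchByCoordinates }) => {
  const { lat, lon } = await resolveCoordinates(iso3, geocode);
  return fetchByCoordinates(lat, lon);
};
```

With axios, `geocode` and `fetchByCoordinates` would each wrap an `axios.get(...)` call; using `await` keeps the second request from starting before the first has returned its coordinates.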

The one bug that became a feature

We now move on to the final section: “The bug that I couldn’t solve”. This has something to do with the ReliefWeb API. We used the ReliefWeb API to get disaster locations, as the initial plan was to integrate Mapbox with this API and show that disasters were increasing throughout the years. As our Earth gets more globalized and industrialized, the trend shows that the number of disasters has been increasing.

The ReliefWeb API has a call limit of about 1000 calls per second, or somewhere around that timeframe. This wouldn’t have been a problem, as we only needed the Lat and Long of the disasters. We would then use the Lat and Long to output markers on Mapbox. Only when you click on a specific marker would we make another API call to get the disaster details from ReliefWeb. However, ReliefWeb doesn’t give you the full details of every disaster in one response. They use the HATEOAS REST API architecture: the list response only contains links, so we need to make an additional call to each disaster’s URL to get its Lat and Long.

Snippet of the reliefweb API

    {
      "time": 10,
      "href": "https://api.reliefweb.int/v1/disasters?appname=apidoc",
      "links": {
        "self": {
          "href": "https://api.reliefweb.int/v1/disasters?appname=apidoc"
        },
        "next": {
          "href": "https://api.reliefweb.int/v1/disasters?appname=apidoc&offset=10&limit=10"
        }
      },
      "took": 3,
      "totalCount": 3252,
      "count": 10,
      "data": [
        {
          "id": "50474",
          "score": 1,
          "fields": {
            "name": "Typhoon Molave - Oct 2020"
          },
          "href": "https://api.reliefweb.int/v1/disasters/50474"
        },
        {
          "id": "50470",
          "score": 1,
          "fields": {
            "name": "Lao PDR: Floods and Landslides - Oct 2020"
          },
          "href": "https://api.reliefweb.int/v1/disasters/50470"
        },
        ... omitted the rest of the code
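
Given a response shaped like the snippet above, following the links could be sketched as below. This is a simplified illustration rather than our actual code: `getJson` is a hypothetical function that fetches a URL and returns the parsed JSON (for example a thin wrapper around axios), and `maxPages` is just a safety cap added for the sketch.

```javascript
// Collect the per-disaster detail links from one page of the list response.
const getDetailLinks = (page) => page.data.map((item) => item.href);

// Link to the next page of results, or null when there is none.
const getNextLink = (page) => page.links?.next?.href ?? null;

// Walk the pages one by one and gather every detail link.
const collectAllDetailLinks = async (firstUrl, getJson, maxPages = 1000) => {
  const links = [];
  let url = firstUrl;
  for (let i = 0; url && i < maxPages; i++) {
    const page = await getJson(url);
    links.push(...getDetailLinks(page));
    url = getNextLink(page);
  }
  return links;
};
```

Each link collected this way still needs its own request to get the Lat and Long, which is where the number of calls adds up.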

Hence, when we are making so many calls (because there are about 3300 total disasters), we end up getting throttled. There were 2 ways to work around this.

- We make the calls in batches of 800-900, with a timeout (interval) in between them.

- We initially make only about 800-900 calls. This should cover the disasters for the years 2019-2021. We then make additional calls when the user selects another year, if that year hasn’t already been loaded.

The second option seems like the right architecture because it improves the initial page load speed. However, it was difficult and funky to implement. Hence, I went with the first. Because ReliefWeb uses the HATEOAS architecture, I used a Promise.all implementation to make 800-900 concurrent axios requests. We would loop through the results of the first API call, and make separate API calls to each of the sublinks. This was what brought about the throttling, even though it gave us a huge improvement in page load speed.
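
For reference, the second option (load a year only when it is first requested) could look something like the sketch below. We did not build this, so treat it as an idea under assumptions: `createYearLoader` is a made-up helper, and `loadYear` is a hypothetical function that returns a Promise of the disasters for one year.

```javascript
// Wrap a per-year loader so that each year is requested at most once.
const createYearLoader = (loadYear) => {
  const cache = new Map(); // year -> Promise of that year's disasters
  return (year) => {
    if (!cache.has(year)) {
      const pending = Promise.resolve()
        .then(() => loadYear(year))
        .catch((err) => {
          cache.delete(year); // forget the failure so a later call can retry
          throw err;
        });
      cache.set(year, pending);
    }
    return cache.get(year);
  };
};
```

Caching the Promise rather than the result means two quick clicks on the same year share one request instead of firing two.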

2 points to note regarding this design:

- We could not make the calls sequentially (one after another), even though this would have enabled all 3000 disasters to be shown, because it would take too long. It would take close to a minute or two for us to load everything.

- Due to Promise.all, when one of the requests fails, the whole Promise rejects immediately and we lose the results of the other requests. I know that we can delay or handle rejections using another approach, but I wasn’t able to set this up.

The second point results in some API calls failing halfway as we get throttled. Hence, it results in something like:

- 2010 - 2021: Full data

- 2000 - 2010: Data fails here as we get throttled

- 1970 - 2000: We can get the data here, because the throttling is over
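
One standard way to keep the successful responses when some requests in a batch fail is `Promise.allSettled`, which waits for every request and reports each outcome instead of rejecting on the first failure. The sketch below is not what we shipped; `fetchAllSettled` is a made-up helper and `fetchOne` is a hypothetical function that returns a Promise for a single URL.

```javascript
// Fire all requests, then split the outcomes into loaded data and failed URLs.
const fetchAllSettled = async (urls, fetchOne) => {
  const results = await Promise.allSettled(urls.map((url) => fetchOne(url)));
  const loaded = [];
  const failedUrls = [];
  results.forEach((result, index) => {
    if (result.status === "fulfilled") {
      loaded.push(result.value);
    } else {
      failedUrls.push(urls[index]); // keep the URL so it can be retried later
    }
  });
  return { loaded, failedUrls };
};
```

The `failedUrls` list could then be retried once the throttling window has passed, rather than leaving a gap in the data.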

Knowing this, I decided to implement a setTimeout/setInterval approach, to place a timer in between the concurrent batches of 800-900 API calls. However, the key issue I faced with this architecture was JavaScript’s Event Loop.

Introduction to JavaScript’s Event Loop

JavaScript is a single-threaded synchronous language. However, the Event Loop does some “magic” and gives the impression that it is not. Because of this Event Loop, the time passed to setTimeout and setInterval is in fact a minimum bound. So if your timeout is set for 10 seconds, it is at least 10 seconds, and not guaranteed to be exactly 10 seconds.
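
A small experiment shows both effects: `setTimeout` returns immediately instead of pausing the code, and the callback only runs once the thread is free, so the delay is a lower bound. `demoTimeout` is a made-up name for this illustration, and the busy loop is only there to keep the thread occupied.

```javascript
// Schedule a timer, then keep the thread busy for twice the requested delay.
const demoTimeout = (delayMs) =>
  new Promise((resolve) => {
    const order = [];
    const start = Date.now();

    // Ask for the callback after delayMs; this line returns immediately.
    setTimeout(() => {
      order.push("timer callback");
      resolve({ order, elapsedMs: Date.now() - start });
    }, delayMs);

    // Keep the single thread busy for twice the requested delay.
    while (Date.now() - start < delayMs * 2) {
      // spin
    }
    order.push("synchronous code");
  });
```

Calling `demoTimeout(50)` resolves with the synchronous entry ahead of the timer callback in `order`, and with an `elapsedMs` of at least 100 rather than 50, because the callback has to wait for the busy loop to finish.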

The intervals and timeouts were not working. The timeouts were non-blocking, so they did not hold back the next batch and I could not segregate the batches. This was different from how I did it using React. With React, it is much easier to control such intervals using your own custom React Hook (like a useInterval hook). I was unsure if it was also due to Vue’s reactivity system causing the timeout to bug out, or if it was due to Mapbox itself. The timeout was implemented as a part of a Mapbox initialisation function, and there were many dependencies involved.

Due to the lack of time, I was unable to pinpoint the crux of the issue, but I did narrow it down to the Event Loop. I have a feeling that this non-blocking behavior exists because JavaScript registers all the setTimeout calls at once, so their callbacks land on the Callback Queue at about the same time.
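
A common way to get a real pause between batches is to wrap `setTimeout` in a Promise and `await` it inside an async function, so the loop itself waits before starting the next batch. The following is a minimal sketch of that idea, not code from the project: `sleep` and `fetchInBatches` are made-up helpers, `fetchOne` is a hypothetical per-URL request function, and the default `batchSize` and `pauseMs` are placeholder values.

```javascript
// Promise-based timer: resolves after at least `ms` milliseconds.
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Run the requests batch by batch, pausing between batches.
const fetchInBatches = async (
  urls,
  fetchOne,
  { batchSize = 800, pauseMs = 1000 } = {}
) => {
  const results = [];
  for (let i = 0; i < urls.length; i += batchSize) {
    const batch = urls.slice(i, i + batchSize);
    // Requests inside one batch still run concurrently.
    results.push(...(await Promise.all(batch.map((url) => fetchOne(url)))));
    // Only pause when another batch is still to come.
    if (i + batchSize < urls.length) await sleep(pauseMs);
  }
  return results;
};
```

The difference from calling `setTimeout` in a plain loop is that `await` suspends the async function until the timer fires, so the next batch is not even created until the pause is over.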

In the end, we chose to present the disasters from 2010 - 2021 as we were out of time for the project.

Wrapping up

With that, we come to the end of my 3-part series for Save My World. I am happy that we did very well for the project, and we were one of the better groups. It was a great experience for me working collaboratively with others, and I thoroughly enjoyed experimenting with implementing a truly responsive web application (that has always been my weakness but I can say that I am much more confident now).

I hope that you have enjoyed my Save My World series, and do let me know what other things you would like to see.