Photo credit: Christopher Gower

This is Part 1 of the Intro to System Design. These are my personal notes, which I have compiled from various resources while learning the basics of System Design. Hopefully this benefits you as well.

Scaling - Horizontal vs Vertical

Horizontal Scaling is the ability to scale by adding more servers, while Vertical Scaling means increasing the ‘strength’ (capacity) of your current server.

| No. | Horizontal Scaling | Vertical Scaling | Category |
|---|---|---|---|
| (1) | Load Balancing Required | N/A | Load Balancing |
| (2) | Resilient | Single Point of Failure | Failures |
| (3) | Network Calls (RPC) | Inter Process Communication | Communication |
| (4) | Data Inconsistency | Data is Consistent | Data |
| (5) | Scales well as users increase | Hardware Limit | Limits |

Both vertical and horizontal scaling are used in the real world.

A hybrid solution combines both: each machine starts out as large as is practical (vertical scaling), and more machines are added as the load grows (horizontal scaling).

Introduction to Load Balancing

Imagine you have N servers. For each new incoming web request, how do we know which server to send it to? That is what a Load Balancer decides. Load Balancers help us route requests in an optimal manner and distribute the load across multiple servers.

How does it work?

It starts by giving each request a uniformly random request ID. For each request ID r1, we hash the ID to get a number m1: h(r1) => m1. We then take m1 % N, where N is the total number of servers, and send the request to the server whose index (0 to N-1) matches the result. This is simple modulo hashing; it is the starting point that Consistent Hashing improves on.
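The routing step above can be sketched in a few lines of Python. This is only an illustration: `stable_hash` and `pick_server` are hypothetical names, and SHA-256 is an assumed choice of hash function (any hash that spreads IDs evenly would do).

```python
import hashlib

def stable_hash(request_id: str) -> int:
    """h(r1) => m1: hash a request ID to a large integer (same on every run)."""
    digest = hashlib.sha256(request_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big")

def pick_server(request_id: str, num_servers: int) -> int:
    """m1 % N: index (0 to N-1) of the server that should get this request."""
    return stable_hash(request_id) % num_servers

# Route a few example requests across N = 4 servers.
for rid in ["r1", "r2", "r3"]:
    print(rid, "-> server", pick_server(rid, 4))
```

The same request ID always lands on the same server as long as N stays the same.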

Because this hash function is uniformly random, we can expect all servers to have roughly the same load. So, what happens when we need to add more servers? How does this affect our hash function and Load Balancer?

Recall that h(r1) => m1, and server index = m1 % N, where N is the total number of servers. However, now that N has changed, the server index for most requests will change as well.
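To get a feel for how large the change is, here is a small sketch that counts how many requests map to a different server index when N goes from 4 to 5. The function names and the request ID format are made up for illustration. For a uniform hash, m1 % 4 and m1 % 5 agree only when m1 % 20 is 0, 1, 2, or 3, so we would expect roughly 80% of requests to move.

```python
import hashlib

def stable_hash(key: str) -> int:
    """Hash a key to a large integer (same result on every run)."""
    return int.from_bytes(hashlib.sha256(key.encode("utf-8")).digest()[:8], "big")

def moved_fraction(num_requests: int, old_n: int, new_n: int) -> float:
    """Fraction of request IDs whose server index changes when N changes."""
    moved = 0
    for i in range(num_requests):
        m = stable_hash(f"request-{i}")
        if m % old_n != m % new_n:
            moved += 1
    return moved / num_requests

# Going from 4 to 5 servers; expect roughly 0.8 for a uniform hash.
print(moved_fraction(10_000, 4, 5))
```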

Things to avoid

- Huge changes in the server indexes that requests are mapped to

Consistent Hashing
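A minimal sketch of the idea, under some assumptions of mine: servers and request keys are hashed onto the same ring, and each key goes to the first server position at or after its own hash (wrapping around at the end). `HashRing`, the server names, and the choice of 100 virtual nodes per server are all hypothetical, not taken from any particular library.

```python
import bisect
import hashlib

def stable_hash(key: str) -> int:
    """Hash a key to a position on the ring (same result on every run)."""
    return int.from_bytes(hashlib.sha256(key.encode("utf-8")).digest()[:8], "big")

class HashRing:
    def __init__(self, servers, vnodes=100):
        self._ring = []  # sorted list of (position, server)
        for server in servers:
            self.add(server, vnodes)

    def add(self, server, vnodes=100):
        # Each server gets several positions so the load evens out.
        for v in range(vnodes):
            bisect.insort(self._ring, (stable_hash(f"{server}#{v}"), server))

    def lookup(self, key):
        # First ring entry at or after the key's position, wrapping around.
        idx = bisect.bisect_left(self._ring, (stable_hash(key), ""))
        if idx == len(self._ring):
            idx = 0
        return self._ring[idx][1]

ring = HashRing(["s0", "s1", "s2", "s3"])
keys = [f"key-{i}" for i in range(2000)]
before = {k: ring.lookup(k) for k in keys}
ring.add("s4")
moved = [k for k in keys if ring.lookup(k) != before[k]]
print(len(moved) / len(keys))  # fraction of keys that changed server
```

When a server is added, the only keys that move are the ones the new server takes over; every other key stays where it was, which avoids the huge index changes of plain modulo hashing.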

Message Queues

Used in asynchronous systems. We have a set of servers, and we need a database to store the details of each server and its jobs. We can’t just store these details in memory because once a server goes down, the data will not persist.

- Servers are processing jobs in parallel.

- A server can crash. The jobs running on the crashed server still need to get processed.

- A notifier constantly polls the status of each server, and if a server crashes it takes ALL unfinished jobs (listed in some database) and distributes them to the rest of the servers. Because distribution uses a load balancer (with consistent hashing), duplicate processing will not occur: job_1, which might be processing on server_3 (alive), will land on server_3 again, and so on.

- This “notifier with load balancing” is a “Message Queue”.

A notifier sends a heartbeat request to each server every xx seconds to check whether the server is down. If a server does not respond, it is assumed that the server is dead. We then query the DB for the information about the server that went down and distribute its jobs to the other servers.

A message queue takes tasks, persists them, assigns them to a server, and regularly checks whether each server is down. If one is, it reassigns that server's tasks to other servers. Message queues are important in System Design because they encapsulate this complexity in just one thing. Some examples/libraries are RabbitMQ, ZeroMQ, and JMS (Java Message Service).
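The behaviour described above can be sketched with a toy, in-memory class. Everything here is hypothetical: `TaskQueue` is not the API of RabbitMQ or any real queue, the `tasks` dict merely stands in for the database, time is passed in explicitly as `now`, and new tasks go to the least loaded live server rather than through a hash-based load balancer.

```python
class TaskQueue:
    """Toy stand-in for a message queue; nothing is actually persisted."""

    def __init__(self, servers, timeout=5.0):
        self.timeout = timeout                        # seconds without a heartbeat
        self.last_seen = {s: 0.0 for s in servers}    # server -> last heartbeat time
        self.tasks = {}                               # task_id -> server (the "DB")

    def heartbeat(self, server, now):
        self.last_seen[server] = now

    def alive(self, now):
        return sorted(s for s, t in self.last_seen.items() if now - t <= self.timeout)

    def _pick(self, now):
        # Assumed policy: least loaded live server, ties broken by name.
        alive = self.alive(now)
        if not alive:
            raise RuntimeError("no live servers")
        load = {s: 0 for s in alive}
        for s in self.tasks.values():
            if s in load:
                load[s] += 1
        return min(alive, key=lambda s: (load[s], s))

    def submit(self, task_id, now):
        self.tasks[task_id] = self._pick(now)
        return self.tasks[task_id]

    def complete(self, task_id):
        self.tasks.pop(task_id, None)

    def reassign_dead(self, now):
        """Move unfinished tasks off servers that stopped sending heartbeats."""
        alive = set(self.alive(now))
        moved = {}
        for task_id, server in list(self.tasks.items()):
            if server not in alive:
                self.tasks[task_id] = moved[task_id] = self._pick(now)
        return moved

q = TaskQueue(["a", "b", "c"], timeout=5.0)
for i in range(6):
    q.submit(f"job{i}", now=0.0)
q.heartbeat("a", now=10.0)
q.heartbeat("b", now=10.0)          # "c" stays silent and is treated as dead
moved = q.reassign_dead(now=10.0)
```

Only the tasks that were on the silent server get reassigned; tasks on live servers are left alone, so they are not processed twice.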

Microservice vs Monolith architecture

Monolithic

A monolith is one huge system that holds all of the logic. You can still horizontally scale out a monolith.

Pros:

- When put under a lot of load, it scales out easily.

- When you have a small team, it's good because you don't have the time to break it up into microservices.

- Fewer moving parts. You do not need to work out how to break it into different systems.

- There might be testing code or setup code. You do not need to duplicate this.

- This is faster because it's not an RPC call. All logic is in the same box, so you only need to make a local call.

Cons:

- If there is a new member, they need lots of context to understand the system. They need to go through the whole codebase.

- Deployments are very large, and every time you change the codebase, the whole application needs to be redeployed.

- Too much responsibility on each server. Single Point of Failure. If 1 server crashes, everything also crashes.

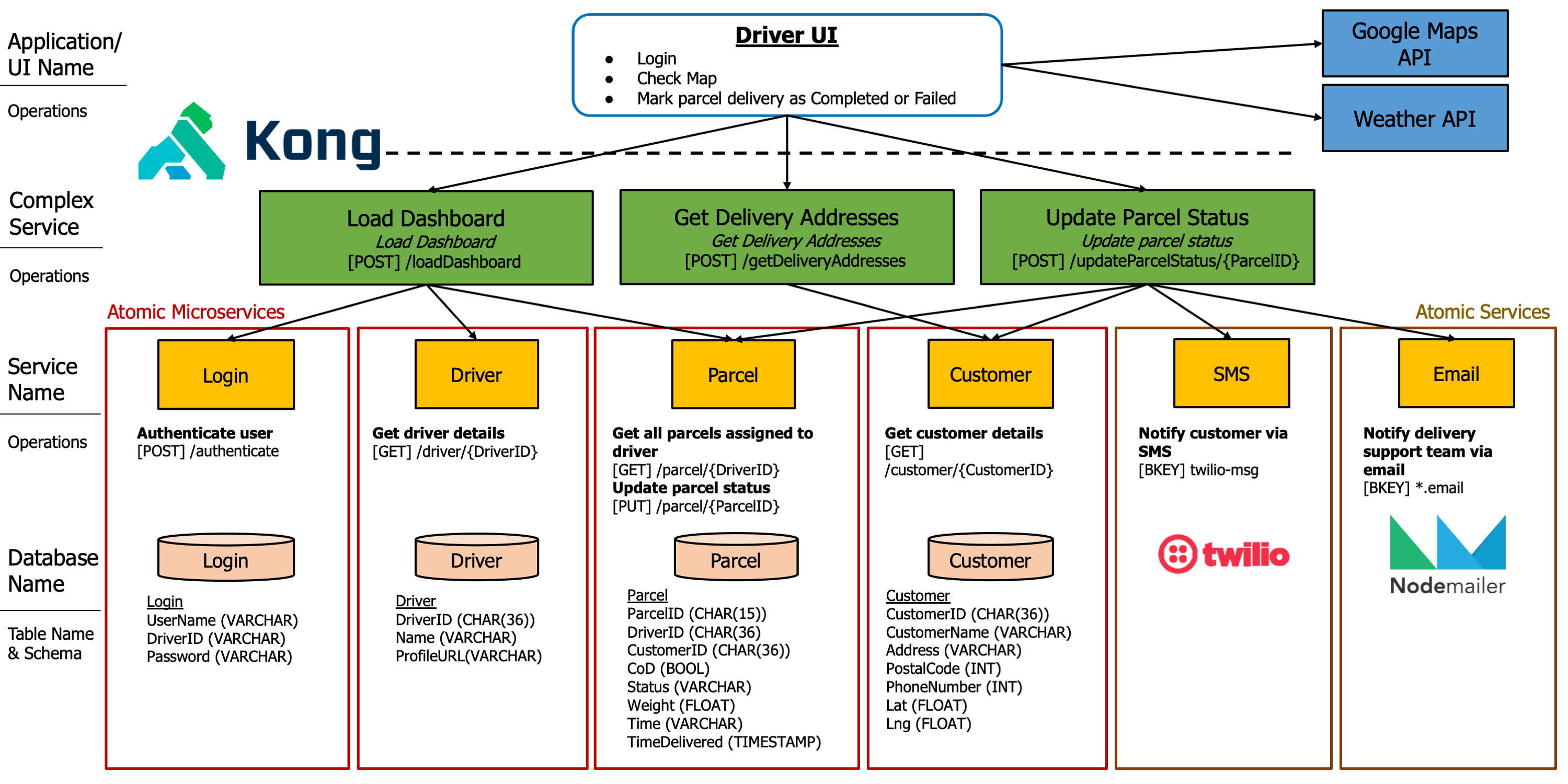

Microservices

Each microservice covers a single business unit. All functions which are related to a single unit belong to one microservice. The client may not be talking to the microservice directly, but talking to a gateway instead.
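As a rough sketch of the gateway idea, the client calls one entry point and the gateway forwards the request to whichever service owns that path. The service names, paths, and return strings below are all made up for illustration; in a real system each service would be a separate process reached over the network rather than a local function.

```python
def orders_service(path: str) -> str:
    # Stand-in for a separately deployed "orders" microservice.
    return f"orders handled {path}"

def users_service(path: str) -> str:
    # Stand-in for a separately deployed "users" microservice.
    return f"users handled {path}"

# The gateway's routing table: path prefix -> owning service.
ROUTES = {
    "/orders": orders_service,
    "/users": users_service,
}

def gateway(path: str) -> str:
    """Forward the request to the service whose prefix matches the path."""
    for prefix, service in ROUTES.items():
        if path == prefix or path.startswith(prefix + "/"):
            return service(path)
    return "404 no service for " + path

print(gateway("/orders/42"))
print(gateway("/users/7"))
```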

Pros:

- It is easier to scale.

- Whenever there is a new developer, they just need to know the context of a single service to start on it.

- Parallel development is easy because there are fewer dependencies for each small service as compared to monoliths.

- Fewer parts are hidden when you are deploying the service. If you see a lot of load on a service, you can scale it out.

Cons:

- Not easy to design. It needs a smart architect to design it well.

- A good indicator that you have a microservice without needing one is that Service_A talks only to Service_B.

You may need to justify why you are using a microservice architecture during an interview.

For large systems, microservices are usually better. Stack Overflow, however, uses the Monolith Architecture.

Database Sharding

Notes to self:

- Sharding is basically a hierarchical way to index databases.

- One problem is that you have to split the database somehow. What do you split on?

- You only split a shard further when the shard grows too big.

- When a shard fails you use the master/slave architecture. Writes always go to the master, reads are distributed across the slaves. When the master fails, one of the slaves becomes master.

Horizontal vs Vertical Partitioning.

Sharding is splitting up the DB. This helps to improve read and write performance. Things to take note:

- Consistency

- Availability

What should you shard your Database on?
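One common answer is a key that most queries already filter on. Here is a minimal sketch, assuming we shard on a hypothetical `user_id` with 4 shards held as in-memory dicts; the function names and the `city` field are invented for the example. A lookup by the shard key touches exactly one shard, while a query on any other field has to visit every shard.

```python
import hashlib

NUM_SHARDS = 4
shards = [dict() for _ in range(NUM_SHARDS)]  # each dict stands in for one DB shard

def shard_for(user_id: str, num_shards: int = NUM_SHARDS) -> int:
    """Map the shard key to a shard index using a stable hash."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_shards

def put(user_id: str, row: dict) -> None:
    shards[shard_for(user_id)][user_id] = row

def get(user_id: str):
    # Query on the shard key: only one shard is consulted.
    return shards[shard_for(user_id)].get(user_id)

def find_by_city(city: str) -> list:
    # Query on a non-shard field: every shard has to be scanned.
    return sorted(
        uid for shard in shards for uid, row in shard.items() if row["city"] == city
    )

put("alice", {"city": "Oslo"})
put("bob", {"city": "Lima"})
put("carol", {"city": "Oslo"})
print(get("alice"), find_by_city("Oslo"))
```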

Problems:

- Joins across shards. If the data we need lies within 2 shards, it is very expensive to make the database calls.

- Inflexibility between the different shards, as you have a fixed number of shards. To solve this, you can further break up each shard into mini shards (this technique is called hierarchical sharding). Consistent hashing also solves this; Memcached clients commonly use consistent hashing.

- Shard failure

What happens when a shard fails? Master/Slave architecture. You have a number of slaves that continuously copy the master. The master holds the most up-to-date copy, and the slaves are always polling the master. When the master fails, the slaves choose 1 master amongst themselves.
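A toy sketch of this, with several simplifying assumptions: `ReplicaSet` is a hypothetical class, replication is done synchronously inside `write` (real systems usually replicate asynchronously), reads rotate across the slaves, and the "election" simply promotes the first slave in the list.

```python
class ReplicaSet:
    """One shard: a master plus slaves that hold copies of its data."""

    def __init__(self, master, slaves):
        self.master = master
        self.slaves = list(slaves)
        self.data = {name: {} for name in [master, *slaves]}
        self._next = 0  # round-robin counter for reads

    def write(self, key, value):
        # Writes always go to the master, then get copied to every slave.
        self.data[self.master][key] = value
        for slave in self.slaves:
            self.data[slave][key] = value

    def read(self, key):
        # Reads are spread across the slaves (or the master if none remain).
        if not self.slaves:
            return self.master, self.data[self.master].get(key)
        slave = self.slaves[self._next % len(self.slaves)]
        self._next += 1
        return slave, self.data[slave].get(key)

    def fail_master(self):
        # The master is gone; promote a slave (here simply the first one).
        del self.data[self.master]
        self.master = self.slaves.pop(0)
        return self.master

rs = ReplicaSet("db0", ["db1", "db2"])
rs.write("k", 1)
first = rs.read("k")
second = rs.read("k")
new_master = rs.fail_master()
rs.write("k", 2)
third = rs.read("k")
```

After the failover, writes go to the promoted node and reads continue from the remaining slave, so the shard stays available.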